About the course

Can a robot truly understand what it sees?

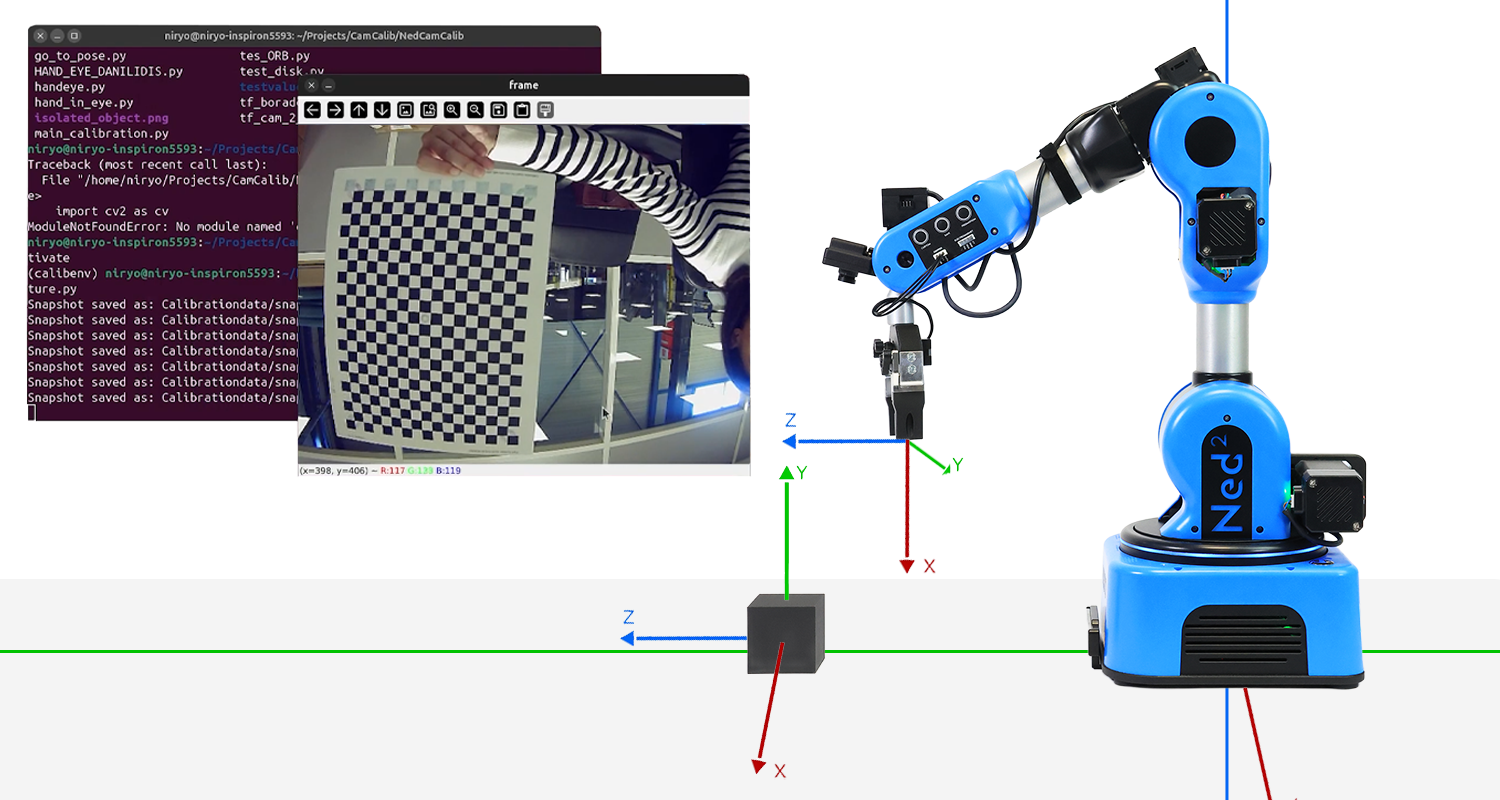

This hands-on lab takes you on a journey from raw images to precise robotic actions. Using only a single camera mounted on the Niryo Ned2, you will build a full perception pipeline, from camera calibration to object detection and real-time grasp execution.

The current vision system relies on a monocular camera mounted on the robot's wrist and a calibrated workspace using visual markers (tags). Here are the technical specifications of this onboard camera:

- Sensor type: IMX322 (CMOS)

- Resolution: 640 × 480

- Pixel size: 12.8 × 11.6 μm

- Field of view (FOV): approximately 104°

- Focal length: approximately 3 mm
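
As a rough consistency check on these figures, the ideal pinhole model relates field of view to sensor extent and focal length: FOV = 2·atan(d / 2f). The sketch below assumes that the listed pixel size is the effective pitch at 640 × 480, that the focal length is exactly 3 mm, and that lens distortion can be ignored. The function names are illustrative and not part of any Niryo API.

```python
import math

def pinhole_fov_deg(n_pixels, pixel_size_um, focal_mm):
    """Field of view (degrees) along one sensor axis, ideal pinhole model."""
    sensor_mm = n_pixels * pixel_size_um / 1000.0  # sensor extent in mm
    return math.degrees(2.0 * math.atan(sensor_mm / (2.0 * focal_mm)))

def focal_length_px(pixel_size_um, focal_mm):
    """Focal length expressed in pixels, as used in a camera matrix."""
    return focal_mm * 1000.0 / pixel_size_um

# Values taken from the specification list; treated as exact for illustration.
h_fov = pinhole_fov_deg(640, 12.8, 3.0)   # horizontal field of view
v_fov = pinhole_fov_deg(480, 11.6, 3.0)   # vertical field of view
fx = focal_length_px(12.8, 3.0)           # horizontal focal length in pixels
fy = focal_length_px(11.6, 3.0)           # vertical focal length in pixels

print(f"horizontal FOV ~ {h_fov:.1f} deg, vertical FOV ~ {v_fov:.1f} deg")
print(f"fx ~ {fx:.1f} px, fy ~ {fy:.1f} px")
```

Under these assumptions the horizontal field of view comes out at about 108°, in the same range as the listed figure of approximately 104°. A wide-angle lens like this usually has noticeable distortion, so the values used in practice should come from camera calibration rather than from the datasheet.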

This setup, while functional, presents several technical and methodological limitations:

- Need for visual tags to guide the robot.

- Demanding workspace configuration that must remain fixed and precisely calibrated.

- High sensitivity to errors, whether from robot movements or setup changes.

- Vulnerability to lighting conditions, shadows, or reflections.

- Detection limited to a few predefined rigid objects.

When a human looks at an object, they can estimate its position, distance, and orientation almost instinctively. Our brains have been trained since birth to perceive depth, to interpret shadows, relative sizes, and movements, and to reconcile information from both eyes. It’s a rich, complex process, yet one that is entirely transparent to us.

A camera, however, doesn’t see like a human. It captures only a 2D projection of a 3D world. It has no sense of depth or understanding of the scene’s context. Estimating an object’s pose (its position and orientation in space) from a single image is therefore a fundamentally ambiguous and ill-posed task.
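
The ambiguity can be illustrated with a minimal pinhole projection: any two points on the same ray through the camera center land on the same pixel, so a single pixel alone cannot tell a small, near object from a large, far one. The intrinsics below (`fx`, `fy`, `cx`, `cy`) and the point coordinates are hypothetical values chosen for illustration, not calibrated Ned2 parameters.

```python
def project(point_xyz, fx, fy, cx, cy):
    """Project a 3D point in the camera frame (Z pointing forward) to pixels."""
    x, y, z = point_xyz
    return (fx * x / z + cx, fy * y / z + cy)

# Hypothetical intrinsics for a 640 x 480 image.
fx = fy = 250.0
cx, cy = 320.0, 240.0

near = (0.05, 0.02, 0.20)              # a point 20 cm in front of the camera
far = tuple(3.0 * c for c in near)     # same ray, three times farther away

pixel_near = project(near, fx, fy, cx, cy)
pixel_far = project(far, fx, fy, cx, cy)

# Both points map to the same pixel (up to floating-point rounding).
print(pixel_near, pixel_far)
```

Resolving this ambiguity requires extra information, such as the known geometry of the object, which is what the CAD-model-based approach in this lab relies on.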

This is compounded by several challenges specific to the industrial context of this project:

- Exclusive use of a monocular camera, without direct access to depth.

- The camera is mobile because it is mounted on the robot, which makes localization difficult.

- No additional sensors (stereo, LIDAR, etc.).

- Image quality limited by camera noise, lighting, and resolution.

In response to these challenges, the goal of this lab is to build a robust, reliable, and coherent image processing pipeline in which the only source of information is a monocular onboard camera. The end goal is to guide a robotic arm to interact correctly with one or more objects in its environment.

The lab thus aims to design a system that:

- Operates without a preconfigured workspace or visual tags,

- Adapts to a mobile, onboard camera,

- Enables flexible recognition of a wide range of objects using their CAD models,

- Provides 6D pose estimation (position + orientation) in the robot's base frame,

- Detects multiple objects simultaneously with dynamic selection,

- Remains robust to environmental variations.
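
Expressing a pose "in the robot's base frame" with a wrist-mounted camera typically means chaining three rigid transforms: base to end effector (from the robot's forward kinematics), end effector to camera (the fixed transform that hand-eye calibration estimates), and camera to object (from pose estimation). The following is a minimal sketch of that chain using 4 × 4 homogeneous matrices. All numeric values and helper names are hypothetical placeholders, not measurements from the Ned2.

```python
import numpy as np

def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T

def rot_z(angle_rad):
    """Rotation matrix for a rotation about the Z axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

# Hypothetical poses (meters, radians), for illustration only.
T_base_ee = make_transform(rot_z(np.pi / 2), [0.25, 0.0, 0.30])  # from robot kinematics
T_ee_cam = make_transform(np.eye(3), [0.0, 0.0, 0.05])           # from hand-eye calibration
T_cam_obj = make_transform(np.eye(3), [0.10, 0.0, 0.20])         # from pose estimation

# Object pose in the base frame: compose the chain from left to right.
T_base_obj = T_base_ee @ T_ee_cam @ T_cam_obj
position = T_base_obj[:3, 3]
print(position)
```

Because the camera moves with the arm, `T_base_ee` has to be read at the moment the image is captured. `T_ee_cam` stays constant as long as the camera mount is not disturbed.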

What you'll learn

- Understand how a monocular camera perceives a 3D environment and how to calibrate it.

- Learn to estimate the 6D pose of an object from a single image using solvePnP.

- Apply hand-eye calibration to link perception and action.

- Master coordinate frame transformations for robotic manipulation.

- Build a complete perception-to-action pipeline for object grasping.

- Gain a practical methodology for solving real robotics problems in industry or research.

Vision Pipeline: From Camera to Grasping with the Niryo Ned2

- Length: 16h

- Content Type: Lab

- Programming: Python

- Equipment: Ned2

- Enrolled students: 85