Vision

Courses tagged with "Vision"

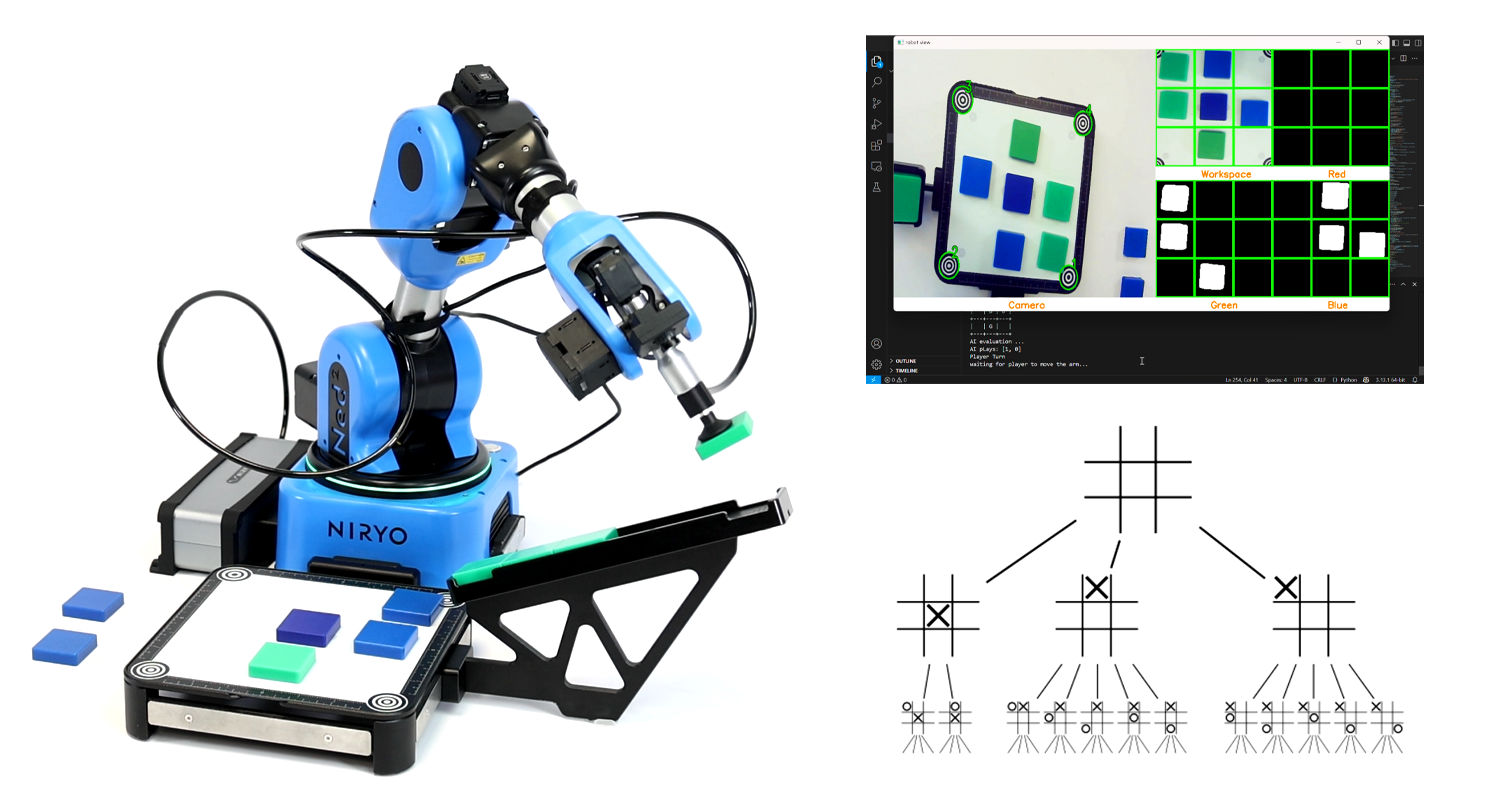

Play Tic Tac Toe

- Content Type:Tutorial

- Programming:Python

- Equipment:Ned2

- Enrolled students: There are no students enrolled in this course.

Visual picking with Artificial Intelligence using TensorFlow

- Content Type:Tutorial

- Programming:Python

- Equipment:Ned2

- Enrolled students: There are no students enrolled in this course.

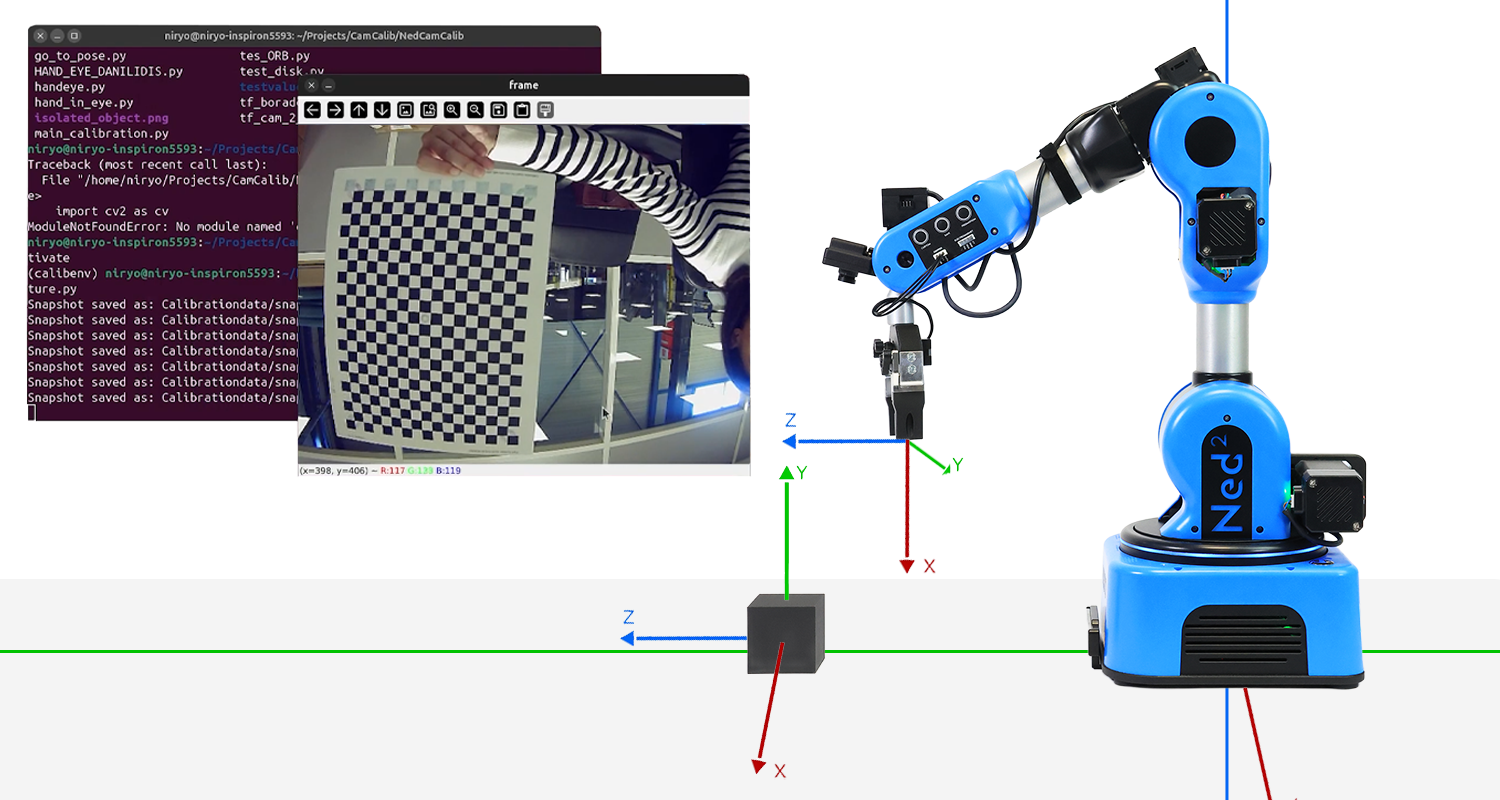

Vision Pipeline: From Camera to Grasping with the Niryo Ned2

- Length:16h

- Content Type:Lab

- Programming:Python

- Equipment:Ned2

Can a robot truly understand what it sees?

This hands-on lab takes you on a journey from raw images to precise robotic actions. Using only a single camera mounted on the Niryo Ned2, you will build a full perception pipeline, from camera calibration to object detection and real-time grasp execution.

The current vision system relies on a monocular camera mounted on the robot's wrist and a calibrated workspace using visual markers (tags). Here are the technical specifications of this onboard camera:

- Sensor type: IMX322 (CMOS)

- Resolution: 640 × 480

- Pixel size: 12.8 × 11.6 μm

- Field of view (FOV): approximately 104°

- Focal length: approximately 3 mm
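
As a rough consistency check on the figures above, the sketch below converts the quoted focal length to pixels and derives the field of view of an ideal pinhole camera. It assumes that the quoted pixel size is the effective size at 640 × 480 and that lens distortion can be ignored; neither is stated in the specification, and the function names are illustrative only.

```python
import math

# Values quoted in the specification list above.
WIDTH_PX, HEIGHT_PX = 640, 480
PIXEL_W_UM, PIXEL_H_UM = 12.8, 11.6
FOCAL_MM = 3.0


def focal_in_pixels(focal_mm, pixel_um):
    """Convert a focal length in millimetres to pixels along one image axis."""
    return focal_mm * 1000.0 / pixel_um


def field_of_view_deg(size_px, focal_px):
    """Full angle seen along one axis by an ideal, distortion-free pinhole camera."""
    return math.degrees(2.0 * math.atan((size_px / 2.0) / focal_px))


fx = focal_in_pixels(FOCAL_MM, PIXEL_W_UM)
fy = focal_in_pixels(FOCAL_MM, PIXEL_H_UM)
fov_h = field_of_view_deg(WIDTH_PX, fx)
fov_v = field_of_view_deg(HEIGHT_PX, fy)

print(f"fx = {fx:.1f} px, fy = {fy:.1f} px")
print(f"horizontal FOV = {fov_h:.1f} deg, vertical FOV = {fov_v:.1f} deg")
```

Under these assumptions the horizontal angle comes out within a few degrees of the quoted 104°. The remaining gap may come from lens distortion, rounding of the "approximately" values, or the axis along which the field of view was measured; a proper camera calibration is the reliable way to obtain the intrinsics.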

This setup, while functional, presents several technical and methodological limitations:

- Need for visual tags to guide the robot.

- Demanding workspace configuration that must remain fixed and precisely calibrated.

- High sensitivity to errors, whether from robot movements or setup changes.

- Vulnerability to lighting conditions, shadows, or reflections.

- Detection limited to a few predefined rigid objects.

When a human looks at an object, they can estimate its position, distance, and orientation almost instinctively. Our brains have been trained since birth to perceive depth, to interpret shadows, relative sizes, and movements, and to reconcile information from both eyes. It’s a rich, complex, yet entirely transparent process for us.

A camera, however, doesn’t see like a human. It captures only a 2D projection of a 3D world. It has no sense of depth or understanding of the scene’s context. Estimating an object’s pose (its position and orientation in space) from a single image is therefore a fundamentally ambiguous and ill-posed task.
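
The ambiguity described above can be illustrated with the pinhole projection model. The sketch below uses made-up intrinsics (`FOCAL_PX`, `CX`, `CY` are hypothetical, not calibrated values for the Ned2 camera) and shows that two points on the same viewing ray, one twice as far away as the other, land on the same pixel.

```python
def project(point_xyz, focal_px, cx, cy):
    """Project a 3D point in the camera frame (metres) to pixel coordinates."""
    x, y, z = point_xyz
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (focal_px * x / z + cx, focal_px * y / z + cy)


# Hypothetical intrinsics for a 640 x 480 image (illustrative only).
FOCAL_PX, CX, CY = 250.0, 320.0, 240.0

near = (0.05, 0.02, 0.25)          # a point 25 cm in front of the lens
far = tuple(2 * c for c in near)   # same viewing ray, twice as far away

pixel_near = project(near, FOCAL_PX, CX, CY)
pixel_far = project(far, FOCAL_PX, CX, CY)

# Both points project to the same pixel: depth is lost in the image.
print(pixel_near, pixel_far)
```

Because a single image cannot distinguish a small nearby object from a larger distant one, extra information is needed to recover depth, for example a known object size or CAD model, or several views taken from different camera positions.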

This is compounded by several challenges specific to the industrial context of this project:

- Exclusive use of a monocular camera, without direct access to depth.

- Camera is mobile, as it is mounted on the robot, making localization difficult.

- No additional sensors (stereo, LIDAR, etc.).

- Camera noise, lighting, resolution.

In response to these challenges, the goal of this lab is to build a robust, reliable, and coherent image processing pipeline whose only source of information is a monocular onboard camera. The end goal is to guide a robotic arm to interact correctly with one or more objects in its environment.

The lab thus aims to design a system that:

- Operates without a preconfigured workspace or visual tags,

- Adapts to a mobile, onboard camera,

- Enables flexible recognition of a wide range of objects using their CAD models,

- Provides 6D pose estimation (position + orientation) in the robot's base frame,

- Detects multiple objects simultaneously with dynamic selection,

- Remains robust to environmental variations.

- Enrolled students: 44
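
Expressing an object pose in the robot's base frame, as listed in the lab goals above, comes down to chaining homogeneous transforms. The following is a minimal sketch under simplifying assumptions: rotations are limited to the Z axis to keep it short (a real 6D pose needs a full 3D rotation), and the numeric values of `base_T_camera` and `camera_T_object` are invented for illustration.

```python
import math


def matmul(a, b):
    """Multiply two 4x4 homogeneous transforms given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def transform(rot_z_rad, translation):
    """Build a 4x4 transform: rotation about Z, then translation (metres)."""
    c, s = math.cos(rot_z_rad), math.sin(rot_z_rad)
    tx, ty, tz = translation
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


# Hypothetical camera pose in the base frame (in practice obtained from the
# robot's forward kinematics combined with a hand-eye calibration).
base_T_camera = transform(math.pi / 2, (0.20, 0.00, 0.30))

# Hypothetical object pose in the camera frame (output of a pose estimator).
camera_T_object = transform(0.0, (0.05, 0.00, 0.10))

# Chaining the two gives the object pose in the base frame.
base_T_object = matmul(base_T_camera, camera_T_object)
position_in_base = [row[3] for row in base_T_object[:3]]
print([round(v, 3) for v in position_in_base])
```

Since the camera moves with the arm, `base_T_camera` has to be recomputed from the current joint state every time an image is taken.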

Add-on ROS2 : Enhance your simulated Niryo Robot for Dynamic Obstacle Avoidance

- Content Type:Add-on

- Programming:ROS2

- Equipment:Ned2 + Vision Set

This ROS2 add-on adds obstacle avoidance capabilities to the simulated Niryo NED2 robot. Using Gazebo and ROS2, you’ll learn how to simulate dynamic environments and make the robot navigate safely around obstacles. The add-on focuses on integrating basic sensors, processing environmental data, and implementing reactive behaviors. It’s a practical extension for testing navigation logic and improving robot autonomy in complex settings. Let’s make your robot smarter in simulation.

This course provides a structured and hands-on approach to advanced simulation and manipulation using ROS2 and Gazebo Sim. Throughout the course, you will learn to:

- Create an engineering plan to solve a robotics problem efficiently.

- Simulate depth cameras and distance sensors in Gazebo Sim for realistic environment interaction.

- Work with SDF and URDF files to define and adjust robot and environment models.

- Understand and use ROS2 messages for inter-node communication.

- Establish communication between ROS2 and Gazebo Sim to synchronize simulation and control.

- Use the Octomap plugin with MoveIt2 to generate 3D maps and support motion planning.

- Create and manage YAML parameter files for modular and reusable configuration.

- Develop a test strategy to validate functionalities and ensure system robustness.

- Enrolled students: 19

CTE : Introduction to Robotics, Blocks Programming, and Automation

- Length:35h

- Content Type:Curriculum

- Programming:Blockly

- Equipment:Bundle discovery

Unlock the world of robotics with our comprehensive curriculum, designed for students and educators looking to explore the fundamentals of automation, programming, and robotic applications. This course takes learners from the basics of robotics history to hands-on programming and industrial automation using the NED2 collaborative robot (cobot) and Blockly programming.

Who Is This Course For?

✔️ High school and college students in CTE or STEM programs

✔️ Educators looking for a structured robotics curriculum

✔️ Beginners eager to learn robotic automation with a no-code approach

✔️ Anyone interested in industrial robotics and automation

Join today and take your first step toward mastering robotics and automation! 🚀

- Enrolled students: 67

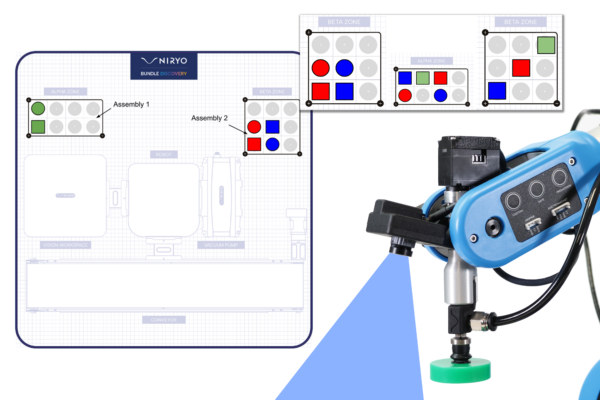

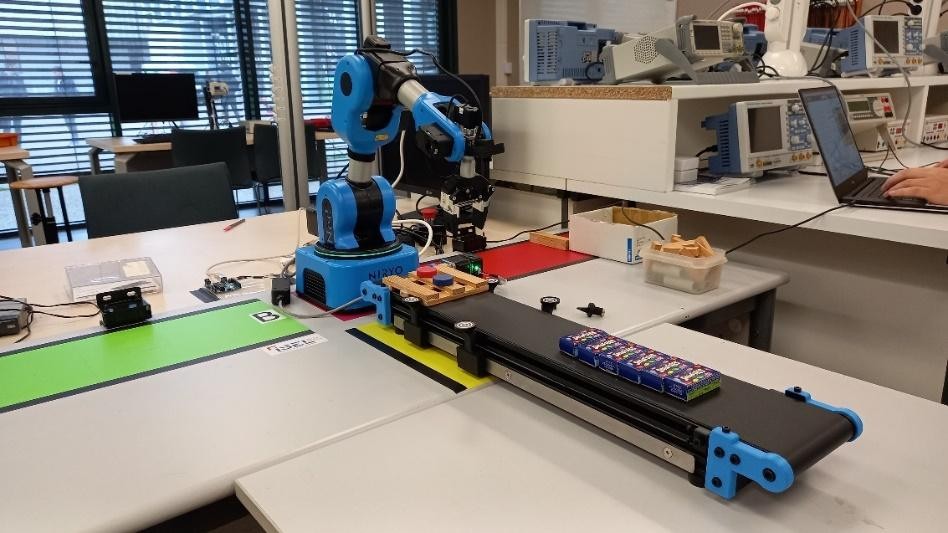

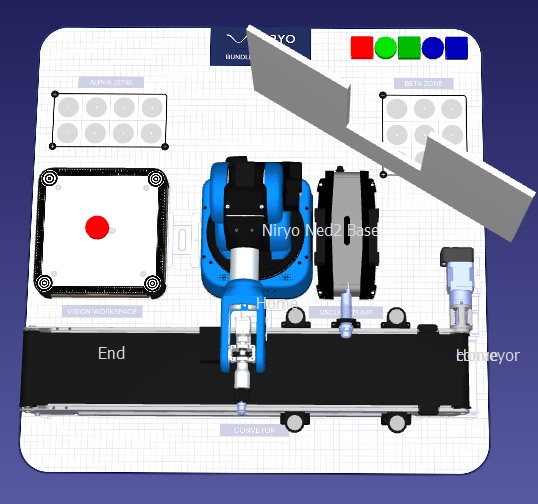

Bundle Discovery : Order Assembly and Preparation

- Length:6h

- Content Type:Lab

- Programming:Python

- Equipment:Bundle discovery

Scenario

We want to carry out an assembly that involves arranging specific manipulable objects (defined by shape and color) in the ALPHA and BETA zones, in a pattern defined by the user. To carry out this assembly, manipulable objects are fed to the robotic arm NED2 by a conveyor. The robotic arm NED2 then identifies the parts before picking them up. If the manipulable object picked up is not required for the pattern, it is deposited in the waste area (rejects); otherwise, it is placed in the ALPHA or BETA zone, following the given pattern.

Lab Contents

Chapter 1: Pick and place

- Define reference points and points of interest in the robotic arm NED2 workspace.

- Create the sequence of movements for a pick-and-place operation.

- Perform a palletizing operation.

Chapter 2: Defining a pattern

- Create an operator input and pattern creation function.

- Determine whether a manipulable object belongs to an existing pattern.

- Trigger the corresponding action for a manipulable object according to the following cases: not belonging to a pattern, belonging to a pattern and already processed, belonging to a pattern and not processed.
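
The three cases listed in Chapter 2 can be sketched as a small decision function. This is only an illustration: representing an object as a `(shape, color)` tuple, the names `classify` and `decide`, and sending already-processed objects to the rejects are assumptions, not the lab's actual solution.

```python
from collections import Counter


def classify(obj, pattern, processed):
    """Return which of the three cases applies to a (shape, color) object."""
    required = pattern.count(obj)
    if required == 0:
        return "not_in_pattern"
    if processed[obj] >= required:
        return "in_pattern_already_processed"
    return "in_pattern_not_processed"


def decide(obj, pattern, processed):
    """Map the case to an action and update the count of placed objects."""
    if classify(obj, pattern, processed) == "in_pattern_not_processed":
        processed[obj] += 1
        return "place_in_zone"
    # Assumption: objects that are not needed (any more) go to the rejects.
    return "send_to_rejects"


# Hypothetical pattern entered by the operator: two red squares, one blue circle.
pattern = [("square", "red"), ("circle", "blue"), ("square", "red")]
processed = Counter()

incoming = [("square", "red"), ("circle", "green"), ("square", "red"), ("square", "red")]
for obj in incoming:
    print(obj, "->", decide(obj, pattern, processed))
```

Counting how many objects of each kind have already been placed lets the same pattern contain duplicates without extra bookkeeping.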

Chapter 3: Vision

- Feed the manipulable object via the conveyor

- Master the results of camera object detection: shape, color and position of a manipulable object in a defined workspace.

- Pick up a manipulable object identified by the camera.

Chapter 4: Integration

- Automatically produce a pattern entered by an operator, using manipulable objects supplied by the conveyor.

Prerequisite knowledge

Python: Basic syntax + simple data and control structures + loops + simple function calls

Required components

- Enrolled students: 55

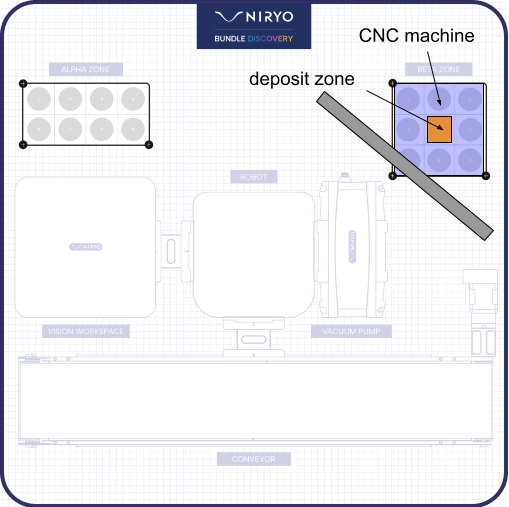

CNC Machine Tool Loading and Unloading

- Length:8h

- Content Type:Lab

- Programming:Python

- Equipment:Bundle discovery

We want to create a loading and unloading scenario for a machine tool. The robotic arm NED2 will have to coordinate with a virtual machine tool to enable a part to be loaded into the machine, machined, unloaded onto the conveyor and then cleared from the area by the conveyor. The machine tool will simulate machining and cleaning cycles in a loop, and the robotic arm NED2 will act in coordination.

Laboratory Contents

Chapter 1: Pick and place

- Practice the notions of reference points and points associated with a tool and a base in robotics.

- Understand and master joint and linear movement sequences.

- Perform pick-and-place operations in a workspace using a specific tool.

Chapter 2: Vision

- Understand and control an RGB camera's settings in a specific environment.

- Put into practice object detection with the camera.

Chapter 3: Generating trajectories

- Create the virtual environment of a robotic cell using robotic simulation software.

- Simulate a trajectory to check its feasibility and extract the information needed to program a robot.

- Compare the robotic arm NED2's actual trajectories with the simulated ones and optimize them.

Chapter 4: Synchronization with a PLC

- Integrate operator controls into the robotic arm NED2’s environment.

- Be able to simulate robotic arm NED2’s operations with on-screen displays to develop complex behaviors.

- Deepen your programming knowledge with thread implementation in Python.

- Apply the synchronization of the robotic arm NED2's movements with external commands.

- Learn how to define the main stages of a machine tool loading and unloading operation.
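
The coordination between the arm and the virtual machine tool described in Chapter 4 can be sketched with Python threads and `threading.Event`. This is a minimal illustration for a single part, under the assumption that each side simply waits for a signal from the other; the function names and log messages are hypothetical, and the real lab would replace the log entries with robot and conveyor commands.

```python
import threading

part_loaded = threading.Event()     # set by the robot once the part is in the machine
machining_done = threading.Event()  # set by the machine once the cycle is finished
log = []


def machine_tool():
    """Virtual machine tool: wait for a part, machine it, then signal the robot."""
    part_loaded.wait(timeout=5)
    log.append("machine: machining")
    machining_done.set()


def robot():
    """Robot side: load the part, wait for the machine, then unload."""
    log.append("robot: load part")
    part_loaded.set()
    machining_done.wait(timeout=5)
    log.append("robot: unload part onto conveyor")


threads = [threading.Thread(target=machine_tool), threading.Thread(target=robot)]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()

print("\n".join(log))
```

Each event is set only after the corresponding step has been recorded, so the order of operations stays the same regardless of which thread the scheduler runs first.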

Chapter 5: Integration

- Be able to create partial integrations constituting sub-systems.

- Be able to integrate all subsystems.

Prerequisite knowledge

Python: Basic syntax + simple data and control structures + loops + simple function calls

Required components

- Teacher: Pauline Vanpoulle

- Enrolled students: 55

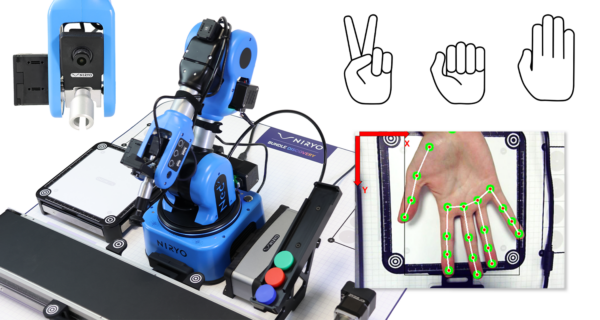

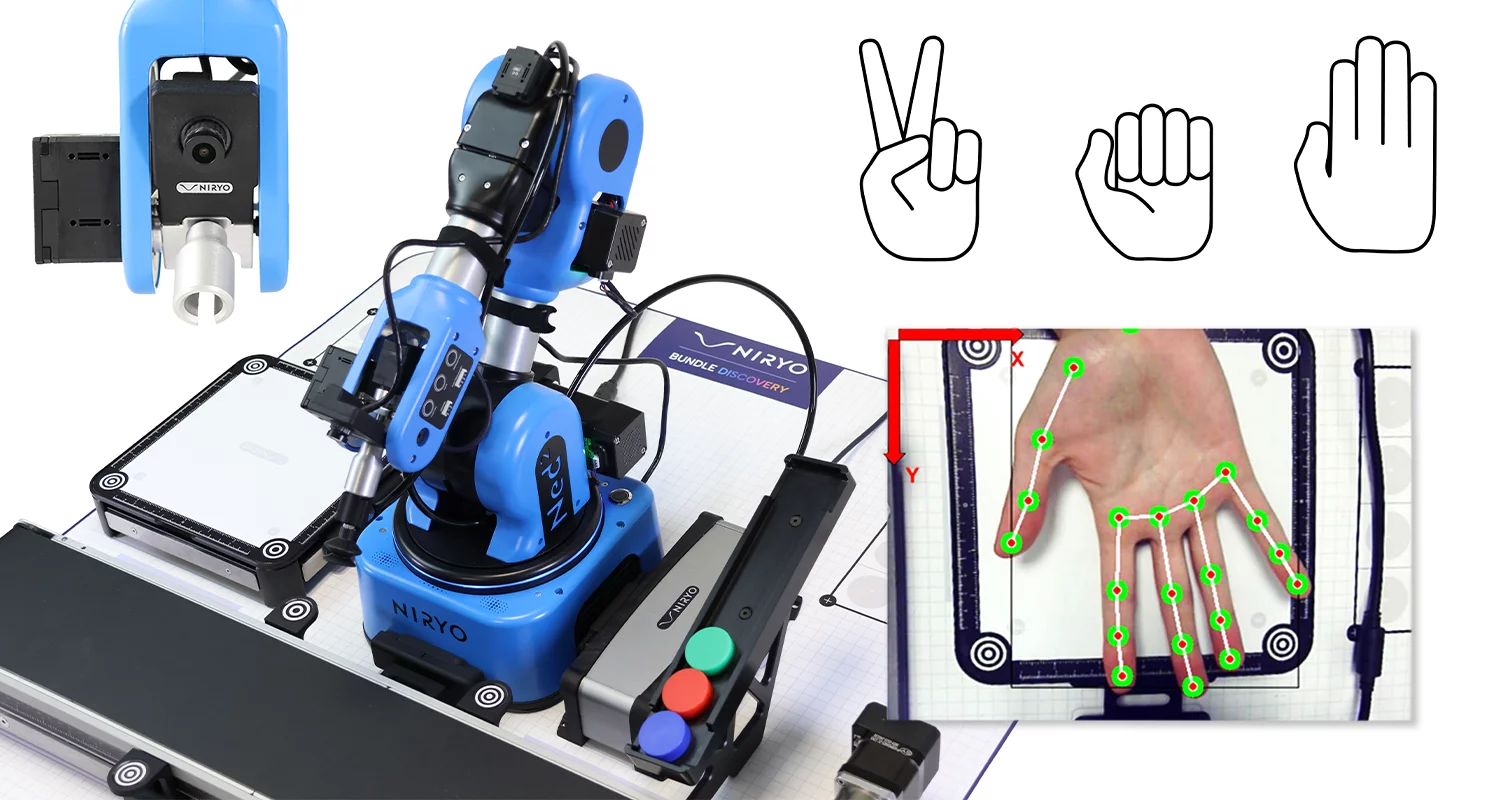

Exploring AI-Driven Collaboration in Robotics

- Length:8h

- Content Type:Lab

- Programming:Python

- Equipment:Bundle discovery

Scenario

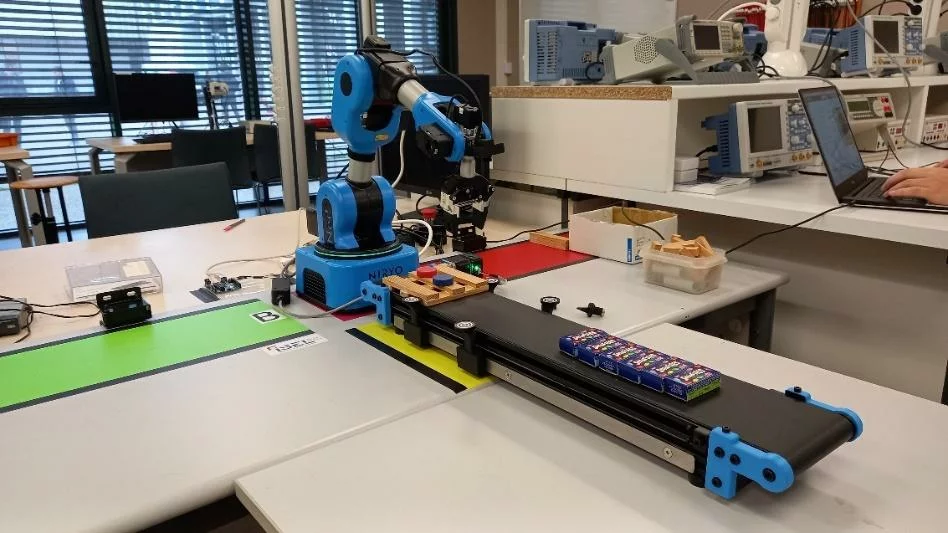

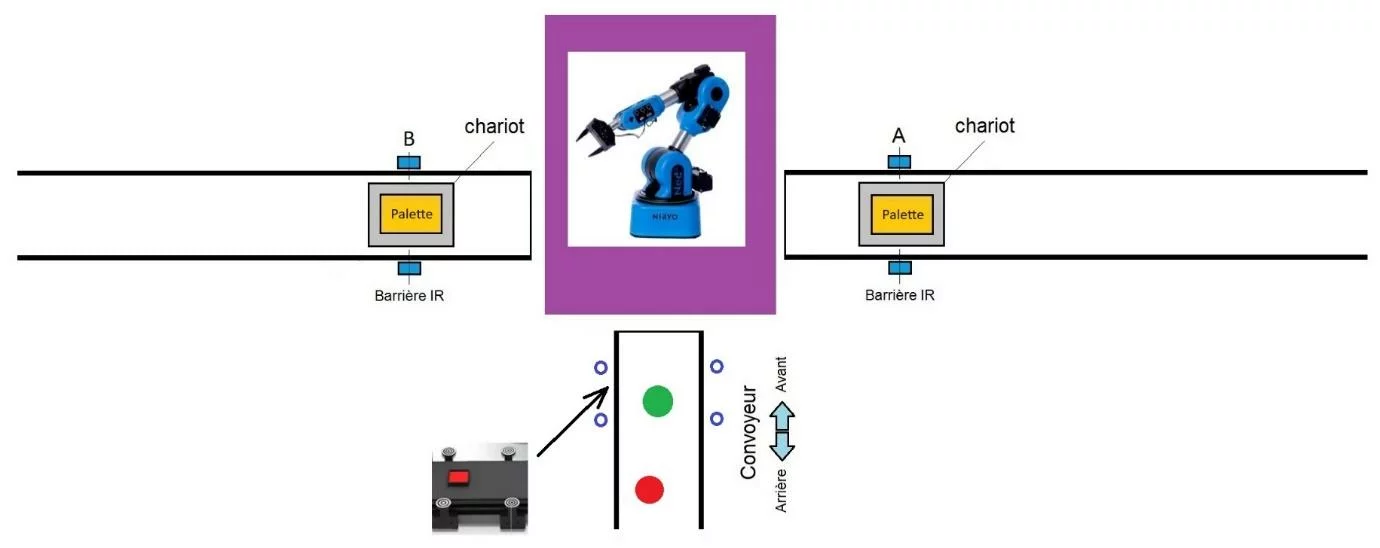

The scenario enables interaction between an operator and a robotic arm NED2, using interfaces based on artificial intelligence. In particular, the operator can use gestures to designate the part to be picked up by the robotic arm NED2, one gesture corresponding to a part from a collection arranged in the alpha zone. The robotic arm NED2 places the gripped part in the operator’s hand, after identifying its position using the vision set camera, above the loading zone. This is a pick-and-place sequence, where the pick and place points are provided in real time by the operator’s gesture commands. Gesture and hand position recognition is performed by deep learning tools.

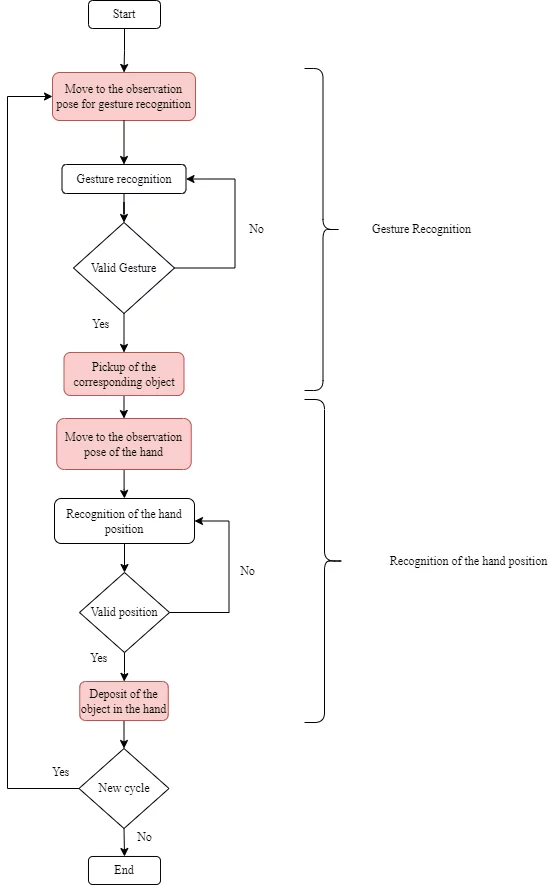

The objective of the scenario is to perform this operation by following the steps illustrated in the following algorithm:

Lab Contents

Chapter 1: Pick and Place

- Define points of interest

- Create movements for pick and place

Chapter 2: Gesture recognition

- Know how to use Teachable Machine to train a model

- Obtain predictions based on gestures

- Create a filter to validate a gesture
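
One possible way to validate a gesture is to accept it only after the model has predicted the same label, with sufficient confidence, on several consecutive frames. The sketch below assumes predictions arrive as `(label, confidence)` pairs; the `GestureFilter` class, its window size, and its threshold are hypothetical choices, not the filter used in the lab.

```python
from collections import deque


class GestureFilter:
    """Validate a gesture once it is seen on `window` consecutive confident frames."""

    def __init__(self, window=5, min_confidence=0.8):
        self.window = window
        self.min_confidence = min_confidence
        self.recent = deque(maxlen=window)

    def update(self, label, confidence):
        """Add one prediction; return the validated label, or None."""
        # Low-confidence frames are stored as None so they break any streak.
        self.recent.append(label if confidence >= self.min_confidence else None)
        first = self.recent[0]
        if (len(self.recent) == self.window and first is not None
                and all(item == first for item in self.recent)):
            return first
        return None


gesture_filter = GestureFilter(window=3, min_confidence=0.8)
stream = [("one", 0.90), ("one", 0.50), ("one", 0.95), ("one", 0.85), ("one", 0.90)]
for label, confidence in stream:
    print(label, confidence, "->", gesture_filter.update(label, confidence))
```

A larger window makes the robot less likely to react to a fleeting misclassification, at the cost of a slower response to the operator.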

Chapter 3: Hand detection

- Detect the hand in the camera image

- Calibrate the camera

- Obtain the coordinates of the drop point in the middle of the hand

Chapter 4: Integration

- Integrate the subprograms into a complex and functional program

Prerequisite knowledge

Python: Basic syntax + simple data and control structures + loops + simple function calls

Reference frames and transformation: Understanding how Cartesian coordinate systems work and the principle of transformations

Required Equipment

- Enrolled students: 55

Sorting of merchandise under the supervision of a Web server

- Length:6h

- Content Type:Lab

- Programming:Python

- Equipment:Bundle STEM

The objective of the Lab is to reproduce a robotic operation of sorting goods and palletizing in a logistics warehouse under the supervision of a web server (figure 1). The goods (red and green pucks) are placed randomly by an operator and transported by the conveyor. When they arrive in the gripping zone (figure 2), the conveyor stops and the goods are located and identified by the camera placed at the end of the manipulator arm. The two-finger gripper, mounted at the end of the arm, grabs the puck and places it, according to its color, on a pallet in zone A (red) or zone B (green) for delivery to two different destinations.

Lab Content

Chapter 1: Server Setup

- Understand the existing links between the Python code and the web page

Chapter 2: Controlling the conveyor

- Implement the Python commands to control the conveyor

Chapter 3: Pick and Place using Vision

- Master the sequence of movements necessary to pick up a part by vision and place it in the dedicated area, without colliding with the environment

Chapter 4: Database

- Develop skills in manipulating databases with Python, and interacting with a user interface to view and export data
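
As an illustration of the database work in Chapter 4, the sketch below records each sorted puck with Python's built-in `sqlite3` module and then counts pucks per zone. The table name `sorted_pucks`, its columns, and the helper functions are assumptions made for this example; only the color-to-zone mapping (red to zone A, green to zone B) comes from the lab description.

```python
import sqlite3

# Mapping taken from the lab description: red -> zone A, green -> zone B.
ZONE_BY_COLOR = {"red": "A", "green": "B"}


def open_log(path=":memory:"):
    """Open the database and create the (hypothetical) log table if needed."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sorted_pucks ("
        "id INTEGER PRIMARY KEY, "
        "color TEXT NOT NULL, "
        "zone TEXT NOT NULL, "
        "sorted_at TEXT DEFAULT CURRENT_TIMESTAMP)"
    )
    return conn


def record_puck(conn, color):
    """Store one sorted puck and return the zone it was sent to."""
    zone = ZONE_BY_COLOR[color]
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO sorted_pucks (color, zone) VALUES (?, ?)", (color, zone)
        )
    return zone


def counts_by_zone(conn):
    """Return a {zone: number of pucks} dictionary."""
    rows = conn.execute(
        "SELECT zone, COUNT(*) FROM sorted_pucks GROUP BY zone ORDER BY zone"
    )
    return dict(rows.fetchall())


conn = open_log()
for color in ["red", "green", "red"]:
    record_puck(conn, color)
print(counts_by_zone(conn))
```

Using parameterized queries (the `?` placeholders) keeps the inserts safe if values later come from a web form, and an in-memory database is convenient for testing before switching to a file on disk.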

Required Equipment

Prerequisite knowledge

Programming :

- Basic notions of algorithms and the Python language

- Using Databases in Python

- Linux commands (if needed)

Networks & Communication :

- Networking Basics

Setup

Software to install:

- NiryoStudio

- Python 3

- Flask, SQLite3

- Enrolled students: 54